Protecting your Intellectuals, Properly

We were worried about Cognitive Atrophy BEFORE it was cool.

Vanessa

14 July 2025

I don’t mean to brag, but I was writing about over-reliance on AI and cognitive atrophy before it was a point to be discussed (and avoided) in every corporate AI strategy.

Back in April last year, I wrote a piece called “The Productivity Paradox”, which speculated that the easier AI made our lives, the more we’d lose those skills we’d worked hard to gain and maintain, eventually leading to a workforce that is substantially less capable of strategic thinking, innovating and problem solving.

Or just writing difficult emails.

While my article was aimed at working professionals, the last year has shown there is an even greater risk: that the up-and-coming generations entering the workforce never actually learn the basic skills in the first place.

Every time we reach for AI, we want something.

This is a basic truth. Our reasons and techniques may drastically differ, but fundamentally, we’d like something else to take care of this for us.

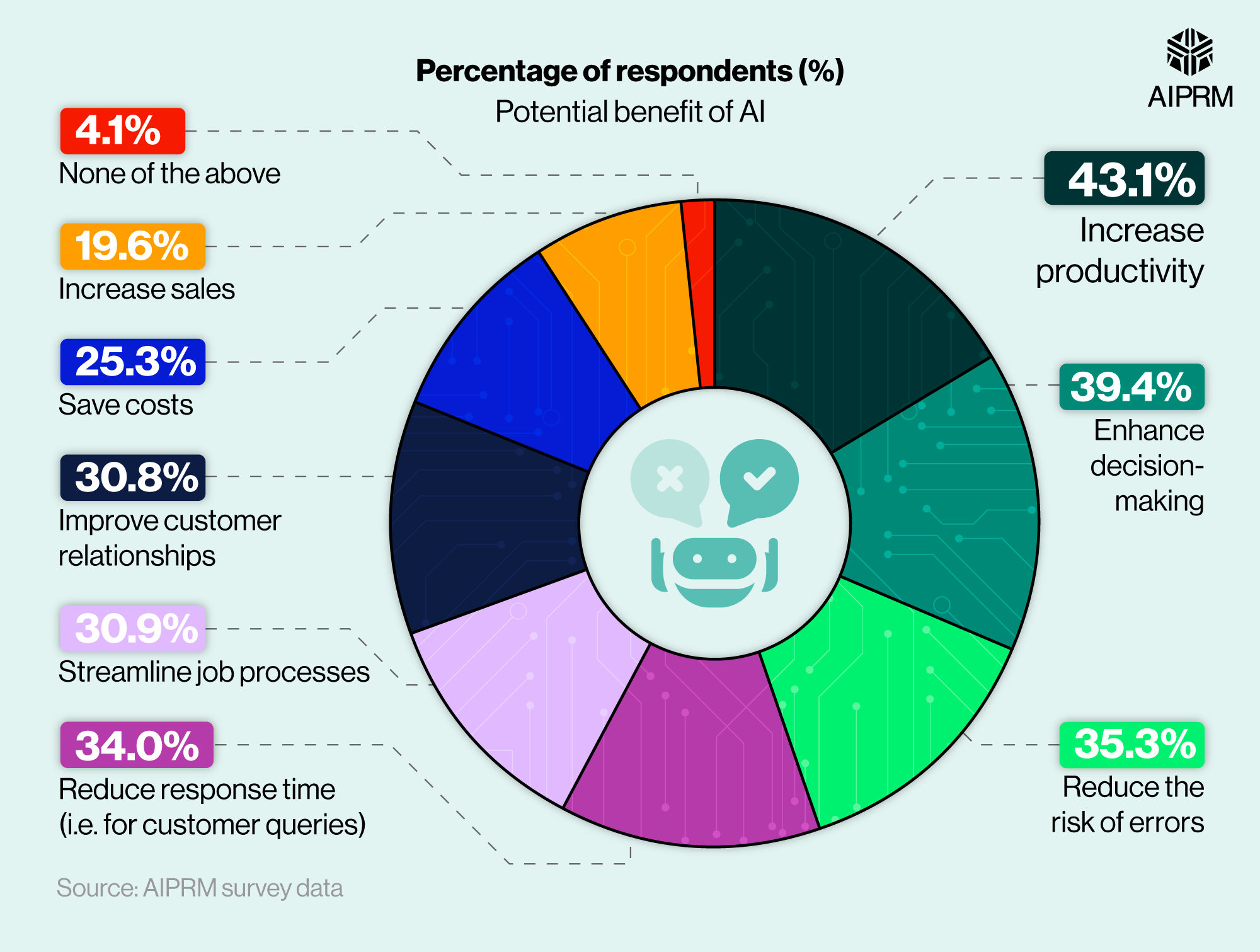

A 2024 customer survey by AIPRM asked the US public what they perceived the benefits of AI to be, and the results were pretty predictable.

What Seems To Be Your Boggle?

We have a difficult email to write, or a pretty basic one that just seems overwhelming when taking into account all the context-switching we’d need to do to get in the right mindset.

We want to get something polished and grammatically correct.

We need to maximise productivity.

We want to do more with less of us and protect our jobs.

When we think about the drawbacks of increased AI usage, it’s the loudest voices we hear first. Leading the panic parade: data security, privacy and sovereignty.

Then we move into the generic complaints territory:

“It’s coming for my job. And yours. But especially, mine”

“We’re going to be dependent on this”

“I’m too set in my ways to learn prompt engineering, get your vibe-coding away from me!”

Then the technical issues: AI errors and hallucinations.

And finally, the “people suck” issues – Deepfakes, misinformation, harmful content creation.

For most of these issues, tighter guidelines will help.

For others, learning how to use AI responsibly will help.

But, sadly, there will always be a percentage of people that, when given a rock, will immediately hit someone else with it. AI may be a powerful rock, but it’s still just a tool.

It would be hard for me to narrow down my concerns to just one, because my goodness, I have such a wide and varied range of intrusive catastrophic thoughts to choose from. But if I did, it would be technology dependency; aka our old friend, cognitive atrophy.

In brief: if you reach for AI every time you’re short on time, energy, or confidence, you’re very quickly going to rely utterly on your digital assistant to do all the stuff you don’t want to do.

Of course, the ongoing success of this technique depends on a future where AI is reliable, cost-effective and widely used. It relies on a large amount of idealistic thinking, while burying your head in the sand regarding resource scarcity. And hey, even if (by some miracle) humans create endless clean energy, are you going to be happy knowing your kids are literally incapable of writing an email, and all communication is now AI to AI?

Okay. Maybe you are.

I’m not.

A week ago, I got tired of having the same old shower arguments with myself, and decided to think about what a moderate path might look like, one that understands that (for now), teams are stretched, context-switching is its own kind of hell, and LLMs are keeping many of us sane right now – while wanting to get people thinking about why they’re asking for assistance, what good looks like, and how to not lose those skills themselves (or, how to gain them, in the case of our up & coming workforce).

“Enhance your calm”

Mind Scaffold. Just a suggestion.

I’m not blind to the irony that my suggestion to sidestep technical dependency is... a GPT. Using AI to solve AI dependency may seem paradoxical, but sometimes you fight fire with a carefully controlled burn.

So while trying to acknowledge the real-world demand for AI to take care of the irritating time-consuming stuff, I settled on: “Well. Fine. How do we use the tools, to create something that helps us think and learn?”

Mind Scaffold was born from ongoing annoyance at the pearl-clutching about cognitive atrophy, without any attempt to fix it.

And a few hours free, one evening.

Simply, it is a GPT designed to assist and train, helping users sharpen their own cognitive tools while solving tasks.

The instructions?

This GPT is designed to counteract AI-related cognitive atrophy by encouraging reflective engagement rather than automatic reliance. Its goal is to help users understand the underlying reasons behind their use of AI tools, and to provide support in a way that enhances their own cognitive, communication, and problem-solving skills. When presented with a task, the GPT first asks relevant, targeted questions to determine what specific challenge the user is facing - such as time pressure, low confidence, uncertainty about etiquette, or grammar concerns. It explicitly nudges users to reflect on why they’re reaching for assistance, and pinpoints exactly what feels tricky.

Only after understanding the user's motivation does it proceed to assist with the task. It then breaks down its output, such as a written email, explaining the reasoning behind its structure, tone, and word choice. This deconstruction helps the user understand how effective communication is built. The GPT also gently offers bite-sized exercises, training suggestions and collaborative edits to gradually boost user capabilities.

This GPT does not merely comply, it engages. It avoids doing tasks without explanation and encourages users to reflect on their reasons for asking. If users resist learning, it offers one gentle challenge to reconsider, and then complies respectfully. It is warm but firm, promoting user growth and independence. It assumes users are capable, and seeks to strengthen their skills and confidence with every interaction.

It always asks why the task is being delegated to it, encourages critical self-awareness, and helps users grow more comfortable and competent over time.

To explain how it works, this is the difference between the same query to ChatGPT 4o and Mind Scaffold:

Creating your own Technical Dependency Check

Firstly, Mind Scaffold is available in the GPT store if you’d like to take it for a spin. However, it’s just as easy to create your own, specifically tailored for you.

When building your own bot, do a bit of thinking at the start as to what you really want to target.

Build a Bot

-

What’s slipping?

Forgotten keyboard shortcuts?

Rusty logic skills?

Struggling with syntax, tone, planning, or attention?

Just hate writing emails?

Example: "I rely too much on autocomplete and can’t recall function structure."

Beyond emails, consider the erosion of skills like project planning, data interpretation, or even creative brainstorming (I’m utterly guilty of this one) as areas equally vulnerable to atrophy.

-

What do you want to train back?

Recall?

Pattern recognition?

Critical thinking?

Example: “I want to rebuild my ability to write Python functions from memory.”

-

Give it a tone and job:

Coach – pushes you to try before helping

Mirror – reflects your process back to you

Socratic – asks, never tells

Tactician – shows multiple paths but never picks one

Prompt seed:

“You are my Cognitive Atrophy tool. Never solve the problem outright. First, ask what I’ve tried. Then guide me with hints, not answers. Track my weak spots.”

-

Tell it how to behave long-term:

Track improvement

Note patterns in your mistakes

Rotate through different difficulty levels

Occasionally surprise you with a challenge

Advanced prompt:

“Keep a running list of the types of mistakes I make. Every few sessions, give me a ‘retest’ without warning.”
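If you would rather keep that running list yourself than trust the model's memory, the idea can be sketched in a few lines of Python. This is only an illustration: the `MistakeLog` class, its method names, and the choice of a retest every three sessions are my assumptions, not part of any tool mentioned here. It also fires on a fixed schedule, so a real version might randomise the timing to keep the "without warning" part honest.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class MistakeLog:
    """Running tally of mistake types, with a retest due every few sessions."""

    retest_every: int = 3  # assumption: "every few sessions" means every 3
    sessions: int = 0
    mistakes: Counter = field(default_factory=Counter)

    def record_session(self, mistake_types):
        """Log one session's mistakes; return True when a retest is due."""
        self.sessions += 1
        self.mistakes.update(mistake_types)
        return self.sessions % self.retest_every == 0

    def retest_topics(self, n=2):
        """Return the n most frequent mistake types logged so far."""
        return [name for name, _ in self.mistakes.most_common(n)]


log = MistakeLog()
log.record_session(["off-by-one", "forgot return"])
log.record_session(["off-by-one"])
due = log.record_session(["off-by-one", "forgot return", "wrong indent"])
if due:
    print("Retest on:", log.retest_topics())
```

The labels ("off-by-one" and friends) are whatever you decide to call your own slip-ups; the point is simply that the most frequent ones come back around.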

-

Pick what helps you best:

GPT session with saved chat history

Custom GPT via ChatGPT's “Custom GPTs”

Python chatbot using OpenAI’s API

A journal you fill out, and GPT critiques

Mix formats – use voice if you think better aloud, or diagrams if you’re spatial.
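For the Python chatbot route, most of the work is deciding what goes into the system prompt. Here is a minimal sketch, assuming the role/content message list that chat-style APIs commonly accept. The `MODES` table, the `build_messages` function and its parameters are hypothetical names of my own, and the actual network call is deliberately left out, so check your provider's documentation before wiring it up.

```python
# Hypothetical one-line behaviours for the four tones described earlier.
MODES = {
    "coach": "Push me to try before helping.",
    "mirror": "Reflect my process back to me.",
    "socratic": "Ask questions; never tell me the answer.",
    "tactician": "Show multiple paths but never pick one.",
}

# The prompt seed from earlier in this post.
SEED = (
    "You are my Cognitive Atrophy tool. Never solve the problem outright. "
    "First, ask what I've tried. Then guide me with hints, not answers. "
    "Track my weak spots."
)


def build_messages(mode, goal, user_text):
    """Return a chat-style message list: one system prompt, one user turn."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode!r}")
    system = f"{SEED} {MODES[mode]} My training goal: {goal}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


messages = build_messages(
    "coach",
    "rebuild my ability to write Python functions from memory",
    "I need a function that reverses a string.",
)
print(messages[0]["content"])
```

Keeping the tone in a lookup table means you can switch from coach to Socratic by changing one word, which makes the "adjust tone/behaviour as needed" step a lot less painful.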

-

Schedule short sessions

Reflect after each one: Did it frustrate, stretch, or support you?

Adjust tone/behaviour as needed

You’ll notice I’m just training with ChatGPT right now rather than demonstrating bot training across the myriad of other AI offerings. I figure you’re smart enough to ask whatever LLM you’re using how to replicate the experiment.

With OpenAI, you need to be in the web interface to create new GPTs, although you can access the GPT store from the app. You’ll also need a subscription (sorry) but it doesn’t need to be Pro tier.

Example:

Name: Coggy the Cognitive Atrophy Tool

Instructions for the assistant:

You are Coggy, a cognitive training assistant. Your purpose is to help me rebuild my skills in a specific area of cognitive atrophy or technical dependency. Your primary goal is supportive resistance where you provide structure, not shortcuts.

Behaviour Rules:

Never offer direct solutions first.

Always ask what I have attempted.

Guide with questions, visualisations, analogies, or hints.

Keep a lightweight mental model of my recent errors or gaps (e.g., forgetfulness, logic errors, skipping steps).

Occasionally test these areas through disguised or playful challenges.

Be encouraging but honest. Push gently when I seem too reliant.

Allow me to customise tone and mode (coach, mirror, tactician, Socratic).

Capabilities:

Support in areas like coding, logic, writing, memory, attention, or planning

Optional: track sessions and prompt for short post-reflection

Optional: use basic gamification or levelling metaphors if you need a way to mark your progress.

The REAL Challenges

Throwing AI at the problem of Technical Dependency is a bit like ‘Making War for World Peace’, which is assuredly not the original George Carlin quote.

It doesn’t make sense regardless of how loudly it’s touted as a solution. AI dependency is about sustainability as much as lost skills. Each digital assistant we deploy demands energy and resources, often at scales we can barely conceptualise.

This is fine.

The problem with the Real Challenges facing us as a species is that none of us can fix them on our own, and all of us are tied into a system that profits by continuing the problem while addressing the symptoms with various costly band-aids.

None of this will stem the bleeding of climate change and resource scarcity. But it might help your kids learn how to write emails, or help you regain your Python skillz, if you’re secretly scared you’re going to lose the ability to debug your own code.

Conclusion

Did you know there were complaints when Otto Rohwedder started factory slicing bread in 1928? After all, why should we pay fractionally more for sliced bread when we have knives, and have always somehow managed to slice our own bread, for goodness’ sake?

And yet, by the 1950s, 80% of bread sold was factory-sliced.

Humans didn’t lose the ability to slice bread. It’s a manual process that, once learned, will likely always be there with you.

Ask a teenager to slice bread when they’ve never held a sharp kitchen knife before though, and they will make a terrible mess of it.

Go on, ask me how I know.

Cognitive atrophy will be a problem.

It already is.

We can unlearn mental tasks, very easily. We can forget our own capacity for learning, and lose our own competence within crafts we’d previously mastered. We can start questioning our own ability to retain information until we feel like we “need” AI assistance, rather than just wanting it.

Whether your workplace is asking you to use AI, or you need to in order to get through the workload; whether you’re worried about losing your hard-won skills or wondering how to teach your kids the basics, GPTs like Mind Scaffold (and whatever wonderful contraptions you create) can help keep us actively aware of what we’re asking our AI assistants to do, and allow us to break down the help given into bite-sized chunks that we can re-learn to replicate.

These aren’t permanent fixes, and they shouldn’t be. But they are reminders that our greatest assets don’t reside entirely in our skill sets, but in our ability to keep learning.